I’m just back from another great week out at SMX West.

One of the panels I attended this year was “Optimizing for Voice Search and Virtual Assistants.” The topic was of great interest to me because of all the work we are doing in this area, and I wanted to hear what others were doing.

The panelists discussed some great things. Here’s my recap.

Jason Douglas, director of product management for Actions on Google

If you’re not familiar with the term “Actions on Google,” it’s the name for apps built to run on Google Assistant. Jason’s presentation offered insights into how Google sees people using voice and the Google Assistant app.

Jason did not provide his slides, so the screenshots below are photos I took with my camera.

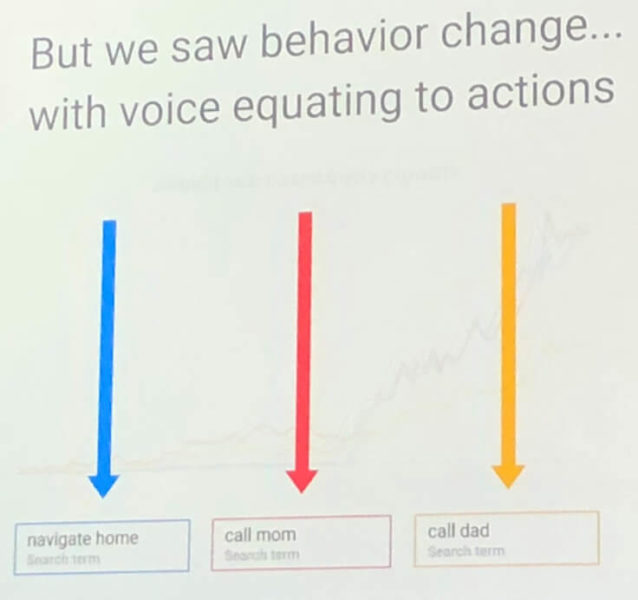

One of his first observations is that people use voice quite differently from the way they use traditional search. The commands tend to be far more action-oriented.

When it comes to personal assistants, people have more of a “get things done” mindset.

In the longer term, artificial intelligence (AI) should greatly expand what you can do.

For example, you will be able to search for photos of your child by his or her name, or for photos of people hugging, and so on.

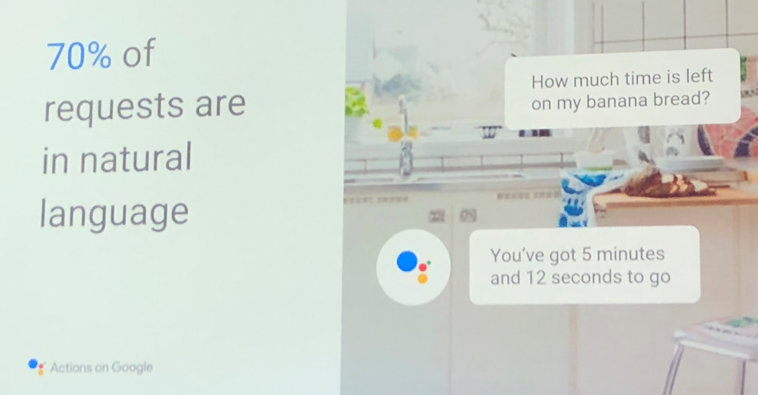

Users will begin to expect these types of capabilities more and more. They already use far more natural language when asking a device by voice to do something.

In fact, 70 percent of all voice queries are already in natural language format.

You will also be able to interact across devices. You’ll have one personal assistant living in the cloud, and you’ll be able to access that one assistant from any device you choose.

Google Assistant is already present on more than 400 million devices today. The number and types of devices will continue to grow over time; smart displays, for example, are coming out later this year.

You will be able to use multiple input modes, not just voice: typing, tapping, and even Google Lens.

Jason also placed a heavy emphasis on structured data markup, as Google wants people to move in this direction.

For example, recipe content can only be accessed via Google Home if the site hosting the recipe has marked it up with structured data.
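To make “structured data markup” concrete, here is a minimal Python sketch that builds schema.org Recipe markup as JSON-LD (one of the formats Google accepts for structured data) and wraps it in the `<script type="application/ld+json">` tag that goes in a page’s HTML. The recipe values and the helper names `recipe_jsonld` and `script_tag` are hypothetical, and exactly which properties Google requires before Google Home will read a recipe is an assumption you should verify against Google’s own structured data documentation.

```python
import json


def recipe_jsonld(name, author, ingredients, steps, prep_time, cook_time, servings):
    """Build a schema.org Recipe object as a JSON-LD string (illustrative sketch)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        # Durations use ISO 8601 format, e.g. "PT15M" means 15 minutes.
        "prepTime": prep_time,
        "cookTime": cook_time,
        "recipeYield": servings,
        "recipeIngredient": list(ingredients),
        # Each instruction becomes its own HowToStep, so the steps are discrete items.
        "recipeInstructions": [{"@type": "HowToStep", "text": step} for step in steps],
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld):
    """Wrap a JSON-LD string in the script tag embedded in the page's HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Hypothetical example recipe, for illustration only.
    markup = recipe_jsonld(
        name="Simple Pancakes",
        author="Jane Doe",
        ingredients=["1 cup flour", "1 egg", "1 cup milk"],
        steps=["Whisk the ingredients together.", "Cook on a hot griddle until golden."],
        prep_time="PT10M",
        cook_time="PT15M",
        servings="4 servings",
    )
    print(script_tag(markup))
```

Splitting the instructions into separate HowToStep items, rather than one block of text, is a design choice made here for clarity; it is not a claim about how Google Home consumes the markup.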

Google is also moving quickly toward enabling commerce in these interactions; an application programming interface (API) already exists in Google Pay to support this (available only in the US and the UK at the moment).

Jason shared the merits of developing an Actions on Google app. In my experience, it’s not that hard to do, and it can provide some great brand benefits in terms of reputation and visibility.

One thing that Jason did not mention is that these apps do not require a user to install them; they’re simply present. If the user knows an app’s name, they can start using it immediately.

Further, even if the user doesn’t know the app name, Google will sometimes respond to a user’s question by asking whether they want the answer from your app.

In other words, Google Assistant can help raise your visibility.

Many brands have already gone down this path, and Jason shared a sample of them.

Arsen Rabinovitch, TopHatRank

Arsen was up next. He started off with some stats, and I found the data on user intent particularly interesting.

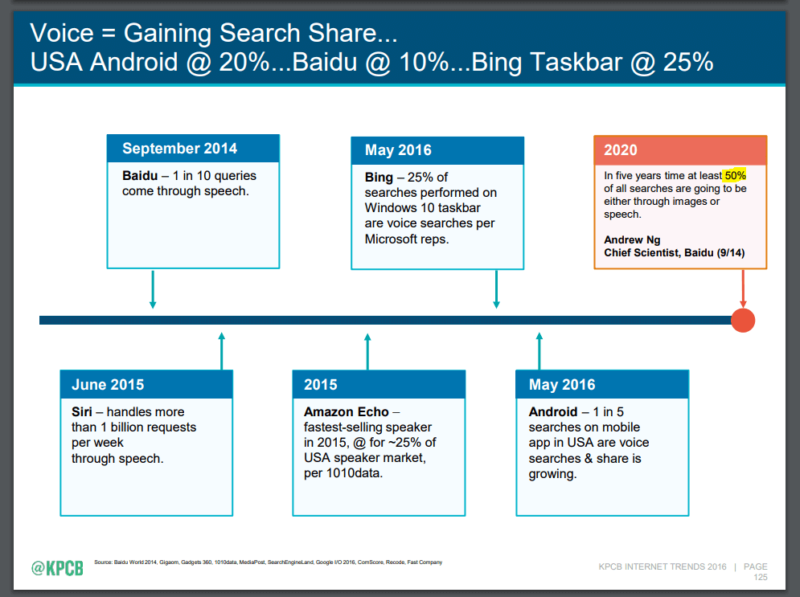

The data attributed to comScore, showing that 50 percent of all queries will be done by voice in 2020, has been around a while, though I believe the original source is a quote from Baidu Chief Scientist Andrew Ng. Interestingly, Gartner pegs the same figure at 30 percent.

Editor’s note: KPCB’s 2016 Internet Trends Report credits a 2014 study by Baidu World with first publishing Andrew Ng’s statement, “In five years, we think 50 percent of queries will be on speech or images.” Ng is the former head of Baidu Research.

The Gartner number is quite a bit lower. I think the comScore/Andrew Ng number is too high, but even 30 percent is a stunning figure, and it suggests that voice is coming at us like a freight train.

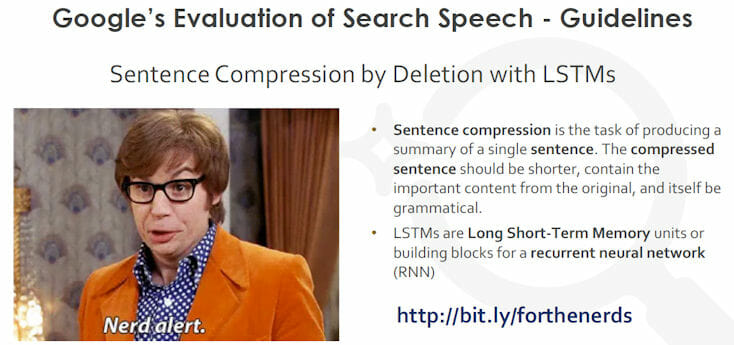

One great resource Arsen shared is the Google Evaluation of Search Speech guidelines. If you’re into voice search, you need to get this guide and go through it in detail.

Another important topic Arsen discussed was sentence compression.

Sentence compression shortens content to make it less wordy, with little or no loss of meaning. It relies on long short-term memory (LSTM) units and recurrent neural network algorithms.

Arsen also shared some examples to illustrate where the results for Google Home come from.

For example, for the query “Who plays Rick Grimes in The Walking Dead,” the answer comes from entity data extracted from Wikipedia.

The query “How many Walking Dead comics are there” produces a featured snippet result from Skybound.com.

In contrast, for the query “Where can I buy The Walking Dead comics?” Google Home assumes you want local results and shares information from the map results.

It does this even though the regular search results for this query show no local pack.

Why track all of this? Because it tells you what you need to try to optimize to participate!

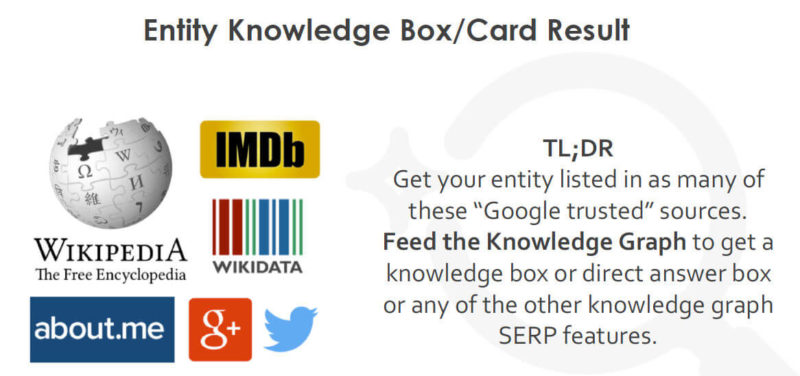

Arsen also shared some insights on how to get Google to show Knowledge Boxes for your entity. The key here is to feed Google the data the way it wants it:

When you get your data listed in all of these trusted sources, it increases Google’s confidence in the information and makes it far more likely you will get a Knowledge Box in search results or a Knowledge Card on a smartphone for your entity.

Arsen offered a series of useful tips for researching which search phrases to target for featured snippets.

First, Answer the Public is a great tool for researching the phrases people are interested in.

You can also use a tool like SEMrush to research which search phrases are near-term opportunities for your site. For one thing, look at which featured snippets you’re already getting.

Once you see the snippets, visit those search results and check whether what you’re showing is a good and complete answer to the user’s question.

If it isn’t, invest the time to improve the content.

Keep in mind that Google is always evaluating how users respond to featured snippets, so just because you have one today doesn’t mean you can’t lose it.

Defending the snippets you already have is a great investment of your time.

You can also take a step further and look for opportunities to win new featured snippets.

By seeing which of your keywords and pages already rank in the top 10, you can spot where you might be poised to obtain new featured snippets.

Once you’ve identified these, you can begin working on the content to improve your chances.
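The defend-and-expand workflow above boils down to two filters over a keyword export. Here is a minimal Python sketch built on a hypothetical `KeywordRow` record; the field names are assumptions, not SEMrush’s actual export columns, so map them from whatever your tool provides. One design choice worth flagging: the sketch only counts a keyword as an opportunity when a featured snippet already appears for that query, on the assumption that you can’t win a snippet Google isn’t showing.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    # Hypothetical fields; map them from your rank-tracking tool's export.
    keyword: str
    position: int            # your page's organic ranking for the keyword
    serp_has_snippet: bool   # a featured snippet appears for this query
    you_own_snippet: bool    # that featured snippet comes from your site


def snippets_to_defend(rows):
    """Keywords where you already hold the featured snippet."""
    return [r.keyword for r in rows if r.you_own_snippet]


def snippet_opportunities(rows, max_position=10):
    """Keywords ranking in the top `max_position` where another site holds the snippet.

    Results are sorted by ranking, best position first.
    """
    candidates = [
        r
        for r in rows
        if r.position <= max_position and r.serp_has_snippet and not r.you_own_snippet
    ]
    return [r.keyword for r in sorted(candidates, key=lambda r: r.position)]


if __name__ == "__main__":
    rows = [
        KeywordRow("how many walking dead comics", 1, True, True),
        KeywordRow("walking dead comic order", 6, True, False),
        KeywordRow("walking dead comic artists", 14, True, False),
    ]
    print("Defend:", snippets_to_defend(rows))
    print("Pursue:", snippet_opportunities(rows))
```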

Here’s my take: I see a lot of people saying that featured snippets are 50 words or less. This is true, but it does not mean you should create pages of 50 words or less, or frequently asked questions (FAQ) pages with many short answers, and expect those pages to produce snippets. In my experience, Google prefers pages that answer the user’s direct question in 50 words or less, embed that answer in richer content, and also answer many of the user’s related questions. I prefer information-rich pages that contain those short direct answers plus a lot of supporting information.
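If you want to audit existing pages against this pattern, a short script can flag sections whose direct answer runs long or that lack supporting content. This is a hypothetical Python helper, not something Arsen or Google provides: it assumes each section is a question heading followed by paragraphs, treats the first paragraph as the direct answer, and uses 50 words as a rough threshold taken from the discussion above rather than a documented Google limit.

```python
from dataclasses import dataclass

# Rough featured-snippet answer length discussed above; an assumption, not an official limit.
MAX_ANSWER_WORDS = 50


@dataclass
class Section:
    question: str          # the user question used as a heading
    paragraphs: list[str]  # body text under that heading, in order


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def review_section(section: Section) -> dict:
    """Check for a short direct answer followed by supporting content."""
    if not section.paragraphs:
        return {
            "question": section.question,
            "answer_words": 0,
            "direct_answer_ok": False,
            "supporting_words": 0,
            "has_supporting_content": False,
        }
    answer_words = word_count(section.paragraphs[0])
    supporting_words = sum(word_count(p) for p in section.paragraphs[1:])
    return {
        "question": section.question,
        "answer_words": answer_words,
        # The first paragraph should be a concise, direct answer.
        "direct_answer_ok": 0 < answer_words <= MAX_ANSWER_WORDS,
        "supporting_words": supporting_words,
        # Thin FAQ-style sections with no supporting text get flagged here.
        "has_supporting_content": supporting_words > 0,
    }


if __name__ == "__main__":
    sec = Section(
        question="Example question from your target phrase list?",
        paragraphs=[
            "A short, direct answer to the question goes here.",
            "Longer supporting content that covers related questions goes here.",
        ],
    )
    print(review_section(sec))
```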

Benu Aggarwal, Milestone Internet

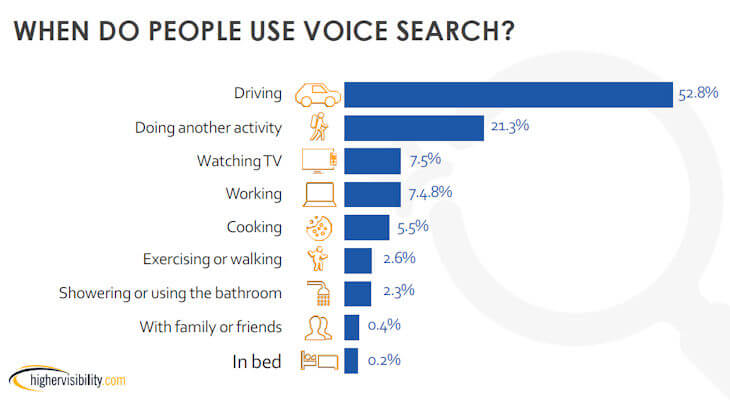

Benu shared some market data at the beginning as well. The data she shared from a HigherVisibility study published in February 2017 was very interesting.

Per this data, people most often use voice search while driving. For now (as of February 2017), usage is dominated by situations in which typing is inconvenient. You can see that in some of the other scenarios, too: cooking, exercising, walking, showering, or using the bathroom.

This suggests voice search is still a developing capability. It’s also consistent with the fact that conversations with devices remain hit-or-miss: users often have to repeat commands, and the need for precise syntax remains an issue.

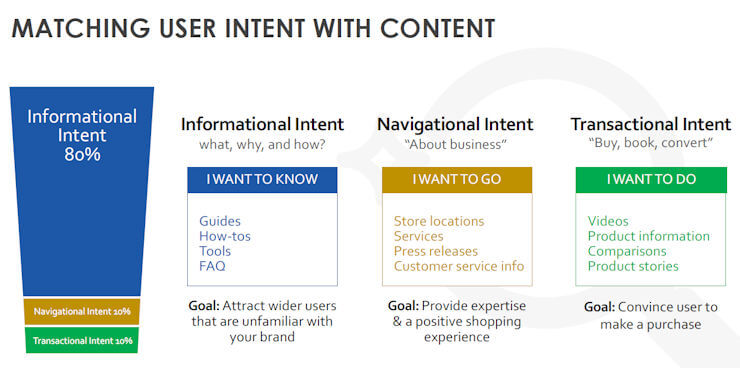

Benu also made some great points about tailoring your content to the varying intents of users.

This is good classic search engine optimization (SEO) and marketing wisdom, but it’s critical to keep it in mind when developing content that virtual assistants might use to respond to user queries.

Note the emphasis on informational content here, too, with Benu pegging the focus at 80 percent.

I like this positioning because of the critical role that filling the top of your sales funnel plays in any business, and it’s also where the largest opportunities lie for gaining visibility from the personal assistants.

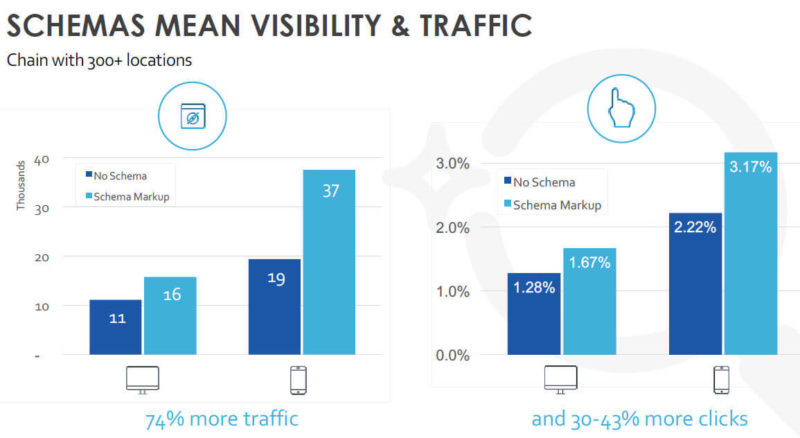

Benu also shared some great data on the impact of schema on visibility and traffic. The data comes from a hotel chain with more than 300 locations.

Marking up those locations with the appropriate schema certainly had a dramatic impact, and that’s before considering any role schema might play in improving your chances of appearing in featured snippets and voice search results.

Tests I’ve run show no indication that schema currently helps you get featured snippets for informational queries, but it certainly helps with Knowledge Boxes for entity information.

Jason Douglas from Google emphasized that schema is important, too. We’ve heard similar things from other Google reps over the past few months, so it’s best to take their advice to heart.

And, as Benu’s data shows, schema can offer you some traffic gains right now.

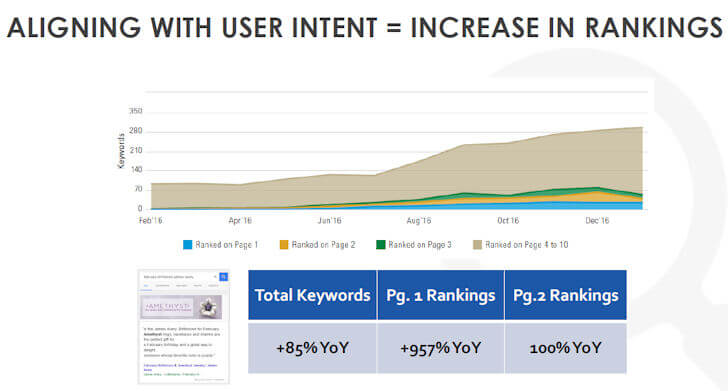

Benu again reminded us of the importance of aligning with user intent, this time showing the payoff it can bring your business where it counts.

After all, it’s the user we’re all here to serve, and developing the right content strategy can go a long way toward bringing the results we’re all looking for.

Summary

Overall, this was a great session, with all three speakers bringing important information. The rise of voice is real, and it will continue to grow, though perhaps not as fast as some predictions indicate.

As an aside, I think the label “voice search” is not a great one, because this is about much more than search. Voice commands I might use are:

- “Alexa, turn on the living room lights.”

- “OK, Google, play Gimme Shelter.”

- “Hey Google, directions to Whole Foods.”

- “Hey Cortana, book dinner for two tonight at Bertucci’s.”

- “Hey Siri, please call _________.”

These are actions we are using the personal assistants to perform on our behalf. Personally, I prefer the term “conversational computing” for this part of it.

But remember, this is not just about voice interactions; it is also about our personal assistants, whose use is growing rapidly. Together they represent a great marketing opportunity for all of us.

The post Session recap from SMX West: Optimizing for voice search and virtual assistants appeared first on Search Engine Land.
