Paid search is an industry that’s grounded in data and statistics, but one that requires practitioners who can exercise a healthy dose of common sense and intuition in building and managing their programs. Trouble can arise, though, when our intuition runs counter to the stats and we don’t have the systems or safeguards in place to prevent a statistically unwise decision.

Should you pause or bid down that keyword?

Consider a keyword that has received 100 clicks but hasn’t produced any orders. Should the paid search manager pause or delete this keyword for not converting? It may seem like that should be plenty of volume to produce a single conversion, but the answer obviously depends on how well we expect the keyword to convert in the first place, and also on how aggressive we want to be in giving our keywords a chance to succeed.

If we assume that each click on a paid search ad is independent of the others and converts at the same underlying rate, we can model the probability of a given number of conversions (successes) across a set number of clicks (trials) using the binomial distribution. This is pretty easy to do in Excel, and Wolfram Alpha is handy for running some quick calculations.

In the case above, if our expected conversion rate is 1 percent, and that is indeed the “true” conversion rate of the keyword, we would expect it to produce zero conversions about 37 percent of the time over 100 clicks. If our true conversion rate is 2 percent, we should still expect that keyword to produce no conversions about 13 percent of the time over 100 clicks.

It isn’t until we get to a true conversion rate of just over 4.5 percent that the probability of seeing zero orders from 100 clicks drops to less than 1 percent. These figures may not be mind-blowingly shocking, but they’re also not the types of numbers that the vast majority of us have floating in our heads.
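For anyone who would rather script this than open Excel, here is a minimal Python sketch of the zero-conversion math, assuming independent clicks as described above. The function name `prob_zero_conversions` is purely illustrative.

```python
def prob_zero_conversions(true_rate: float, clicks: int) -> float:
    """Binomial probability of seeing 0 conversions in `clicks` independent clicks."""
    return (1.0 - true_rate) ** clicks

# The two scenarios discussed above: 100 clicks at 1 percent and 2 percent.
for rate in (0.01, 0.02):
    chance = prob_zero_conversions(rate, 100)
    print(f"true rate {rate:.1%}: {chance:.1%} chance of zero orders in 100 clicks")

# True conversion rate at which the zero-order probability over 100 clicks
# equals exactly 1 percent; anything above this pushes it below 1 percent.
threshold_rate = 1.0 - 0.01 ** (1 / 100)
print(f"threshold rate: {threshold_rate:.2%}")
```

The same one-line formula works for any click count, so it is easy to check a keyword against whatever conversion rate we expected of it.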

When considering whether to pause or delete a keyword that has no conversions after a certain amount of traffic, our common sense can inform that judgement, but our intuition is likely stronger on the qualitative aspects of that decision (“There’s no obvious difference between this keyword and a dozen others that are converting as expected.”) than the quantitative aspects.

Achieving a clearer signal with more data

Now consider the flip side of the previous scenario: if we have a keyword with a true conversion rate of 2 percent, how many clicks will it take before the probability of that keyword producing zero conversions falls below 1 percent? The math works out to 228 clicks.
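That click count comes from solving (1 - rate)^n < 0.01 for n. A short sketch of the calculation, with a hypothetical helper name and the same independence assumption as before:

```python
import math

def clicks_to_rule_out_zero(true_rate: float, max_prob_zero: float = 0.01) -> int:
    """Smallest click count at which P(zero conversions) falls below max_prob_zero."""
    # (1 - true_rate) ** n < max_prob_zero  <=>  n > log(max_prob_zero) / log(1 - true_rate)
    bound = math.log(max_prob_zero) / math.log(1.0 - true_rate)
    return math.floor(bound) + 1  # strictly above the bound

print(clicks_to_rule_out_zero(0.02))  # 228
```

Lower conversion rates push this number up quickly, which is part of why sparse keywords are so hard to judge on their own.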

That’s not even the heavy lifting of paid search bidding, where we need to set bids that accurately reflect the underlying conversion rate of a keyword, not just rule out extreme possibilities.

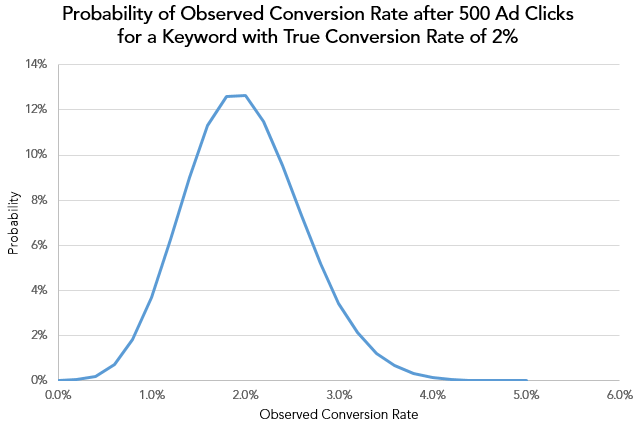

Giving that 2 percent conversion rate keyword 500 clicks to do its job, we’d be right to assume that, on average, it will generate 10 conversions. But the probability of getting exactly 10 conversions is a little under 13 percent. With just one conversion more or fewer, our observed conversion rate will be off from the true conversion rate by at least 10 percent (running at either 1.8 percent or less, or 2.2 percent or more).

In other words, if we are bidding a keyword with a true conversion rate of 2 percent to a cost per conversion or cost per acquisition target, there is an 87 percent chance that our bid will be off by at least 10 percent if we have 500 clicks’ worth of data. That probability sounds high, but it turns out you need a really large set of data before a keyword’s observed conversion rate will consistently mirror its true conversion rate.
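The 13 percent and 87 percent figures can be reproduced with the binomial probability mass function. This is a small sketch using only Python's standard library; `binom_pmf` is a helper written for this illustration, not part of any bidding tool.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k conversions in n independent clicks at rate p."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)

clicks, true_rate = 500, 0.02

# Chance of landing on exactly the expected 10 conversions.
p_exactly_10 = binom_pmf(10, clicks, true_rate)

# With 500 clicks, any count other than 10 puts the observed rate
# at 1.8 percent or less, or 2.2 percent or more.
p_off_by_10pct = 1.0 - p_exactly_10

print(f"exactly 10 conversions: {p_exactly_10:.1%}")
print(f"off by at least 10 percent: {p_off_by_10pct:.1%}")
```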

Staying with the same example, if you wanted to reduce the chance of your bids being off by 10 percent or more to a probability of less than 10 percent, you would need over 13,500 clicks for a keyword with a true conversion rate of 2 percent. That’s just not practical, or even possible, for a great many search programs and their keywords.
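As a rough cross-check on that order of magnitude, we can swap the binomial for a normal approximation, which gives a closed-form sample size. This is a back-of-the-envelope sketch and not necessarily how the figure above was derived; the function name and defaults are assumptions for illustration, and the approximation ignores the fact that conversions come in whole numbers.

```python
import math
from statistics import NormalDist

def approx_clicks_needed(true_rate: float,
                         rel_error: float = 0.10,
                         max_miss_prob: float = 0.10) -> int:
    """Normal-approximation click count so that the observed rate is off by
    rel_error or more with probability of roughly max_miss_prob."""
    # Two-sided z-score: half of the allowed miss probability in each tail.
    z = NormalDist().inv_cdf(1.0 - max_miss_prob / 2.0)
    # Require z * sqrt(p * (1 - p) / n) <= rel_error * p and solve for n.
    return math.ceil(z**2 * (1.0 - true_rate) / (rel_error**2 * true_rate))

print(approx_clicks_needed(0.02))
```

The approximation lands a little above 13,000 clicks, in the same neighborhood as the figure quoted above. An exact binomial calculation will differ somewhat, depending on how the discrete boundary cases are counted.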

This raises two related questions that are fundamental to how a paid search program is bid and managed:

- How aggressive do we want to be in setting individual keyword bids?

- How are we going to aggregate data across keywords to set more accurate bids for each keyword individually?

To set a more accurate bid for an individual keyword, you can essentially wait until it has accumulated more data and/or use data from other keywords to inform its bid. Being “aggressive” in setting an individual keyword’s bid means favoring that keyword’s own data even when the error bars on its estimated conversion rate are fairly wide.

A more aggressive approach supposes that some keywords will inherently perform differently from even their closest keyword “cousins,” so it will ultimately be beneficial to more quickly limit the influence that results from related keywords have on individual keyword bids.
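One simple way to picture this trade-off is a weighted blend of the keyword’s own data with the rate of its related keywords. The sketch below is an illustrative assumption, not a description of any particular bidding platform: `prior_clicks` is a hypothetical knob for how many clicks’ worth of weight the group rate receives, so a smaller value corresponds to a more aggressive approach.

```python
def blended_conversion_rate(kw_conversions: int,
                            kw_clicks: int,
                            group_rate: float,
                            prior_clicks: float) -> float:
    """Blend a keyword's observed data with a group-level conversion rate.

    The group rate is treated as if it were `prior_clicks` extra clicks of
    evidence, so its influence fades as the keyword gathers its own clicks.
    """
    pseudo_conversions = group_rate * prior_clicks
    return (kw_conversions + pseudo_conversions) / (kw_clicks + prior_clicks)

# A brand-new keyword simply inherits the group rate.
print(blended_conversion_rate(0, 0, group_rate=0.01, prior_clicks=500))

# After 500 clicks and 10 orders, the estimate sits between 1 and 2 percent.
print(blended_conversion_rate(10, 500, group_rate=0.01, prior_clicks=500))

# A more aggressive setting trusts the keyword's own data sooner.
print(blended_conversion_rate(10, 500, group_rate=0.01, prior_clicks=100))
```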

For example, one of the simplest (and probably still most common) ways that a paid search advertiser can deal with sparse individual keyword data is to aggregate data at the ad group level or up to the campaign or even account level. The ad group may generate a one percent conversion rate overall, but the advertiser believes that the true conversion rate of the individual keywords varies a great deal.

By bidding keywords entirely on their own data once they have reached 500 or 1,000 clicks, the advertiser accepts that statistical chance will leave bids off by 50 percent or more at any given time for a non-trivial share of the keywords at that level of volume, but that may be worth it.

For a keyword with a true conversion rate of 2 percent, the observed conversion rate will be off from the true conversion rate by 50 percent or more about 15 percent of the time, on average, after 500 clicks, and about 3 percent of the time after 1,000 clicks. If the alternative is for that keyword to get its bid from the ad group (based on the ad group’s one percent conversion rate), then bidding on the keyword’s own data will still be better than having a bid that is 50 percent too low 100 percent of the time.
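To see how quickly those error rates shrink with volume, we can sum the binomial probabilities of every conversion count that would put the observed rate at least 50 percent away from the true rate. This is a sketch under the same independence assumption; the helper names are made up for this example, and the probabilities are computed in log space so the function stays stable at larger click counts.

```python
from math import exp, lgamma, log, log1p

def binom_pmf(k: int, n: int, p: float) -> float:
    """Binomial probability of exactly k conversions, computed in log space."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log1p(-p))
    return exp(log_pmf)

def prob_off_by_at_least(rel_error: float, clicks: int, true_rate: float) -> float:
    """Chance the observed rate misses the true rate by rel_error or more."""
    expected = clicks * true_rate
    tolerance = 1e-9  # guards against floating-point noise right at the boundary
    return sum(
        binom_pmf(k, clicks, true_rate)
        for k in range(clicks + 1)
        if abs(k - expected) >= rel_error * expected - tolerance
    )

for clicks in (500, 1000):
    miss = prob_off_by_at_least(0.50, clicks, 0.02)
    print(f"{clicks} clicks: off by 50 percent or more {miss:.1%} of the time")
```

Calling the same function with `rel_error=0.10` and 500 clicks reproduces the roughly 87 percent figure from earlier.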

This speaks to the importance of wisely grouping keywords together for bidding purposes. For an advertiser whose bidding platform is confined to using the hierarchical structure of their AdWords paid search account to aggregate data, this means creating ad groups of keywords that are likely to convert very similarly.

Often this will happen naturally, but not always, and there are more sophisticated ways to aggregate data across keywords if we don’t have to confine our thinking to the traditional ad group/campaign/account model.

Predicting conversion rate based on keyword attributes

There is a lot we can know about an individual keyword and the attributes it shares with keywords that we may or may not want to group in the same ad group or campaign for any number of reasons (ad copy, audience targeting, location targeting and so on).

The number of keyword attributes that could be meaningful in predicting conversion rates is limited only by an advertiser’s imagination, but some examples include attributes of the products or services the keyword is promoting:

- product category and subcategories;

- landing page;

- color;

- size;

- material;

- gender;

- price range;

- promotional status;

- manufacturer and so on.

We can also consider aspects of the keyword itself, like whether it contains a manufacturer name or model number; the individual words or “tokens” it contains (like “cheap” vs. “designer”); whether it contains the advertiser’s brand name; its match type; its character length and on and on.

Not all attributes of a keyword we can think of will be great predictors of conversion performance or even generate enough volume for us to do a useful analysis, but approaching bidding in this way opens up our possibilities in dealing with the problem of thin data at the individual keyword level. Google itself has dabbled in this line of thinking with AdWords labels, though that feature has its limits.

When considering multiple keyword attributes in paid search bidding, the level of mathematical complexity can escalate very quickly, but even approaches on the simpler end of the spectrum can be effective at producing more accurate keyword bidding decisions.
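On the simpler end of that spectrum, one option is to pool clicks and conversions across every keyword that shares an attribute and look at the pooled rate. The sketch below does this for the tokens in a keyword. The keywords and numbers are made up purely for illustration, and `rate_by_token` is a hypothetical helper rather than a feature of any platform.

```python
from collections import defaultdict

# Made-up example rows: (keyword, clicks, conversions)
keyword_stats = [
    ("cheap leather boots", 120, 1),
    ("designer leather boots", 80, 3),
    ("cheap rain boots", 200, 2),
    ("designer rain boots", 50, 2),
]

def rate_by_token(rows):
    """Pool clicks and conversions across all keywords containing each token."""
    clicks = defaultdict(int)
    conversions = defaultdict(int)
    for keyword, kw_clicks, kw_conversions in rows:
        # set() so a token repeated within one keyword is only counted once
        for token in set(keyword.split()):
            clicks[token] += kw_clicks
            conversions[token] += kw_conversions
    return {token: conversions[token] / clicks[token]
            for token in clicks if clicks[token] > 0}

for token, rate in sorted(rate_by_token(keyword_stats).items()):
    print(f"{token}: {rate:.2%}")
```

Each token now draws on far more clicks than any single keyword has, at the cost of assuming that keywords sharing a token convert similarly. The same pooling works for any attribute in the list above, such as product category or price range.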

Closing thoughts

I’ve really just scratched the surface on the topic of predicting keyword conversion rates and the basic statistics that surround paid search bidding. Most advertisers also have to consider some form of average order size or value, and seasonality can have a huge effect on where we want our bids to be.

Paid search bidding has also only grown more complex over time as properly accounting for factors like device, audience and geography has grown more important.

Clearly, there are many moving pieces here, and while our intuition may not always be sound when scanning through monthly keyword-level performance results, we can trust it a bit more in assessing whether the tools we are using to help us make better decisions are actually doing so smartly and delivering the kind of higher-level results that meet our expectations over the long term.

The post Coaxing smarter paid search bidding decisions out of sparse conversion data appeared first on Search Engine Land.
